05. Quiz: TensorFlow Softmax

TensorFlow Softmax

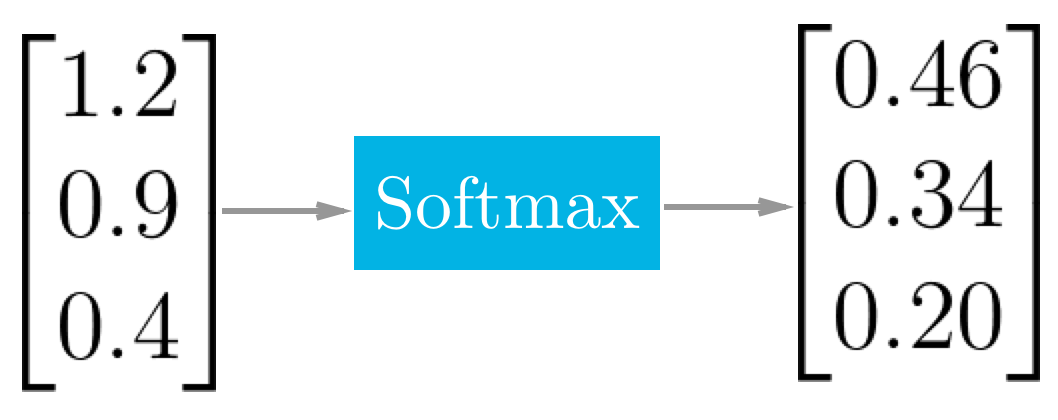

The softmax function squashes its inputs, typically called logits or logit scores, to values between 0 and 1 and normalizes them so that the outputs sum to 1. The output of the softmax function can therefore be read as a categorical probability distribution, which makes it a natural choice for the output activation of a network that predicts one of several classes.
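To make the squashing and normalizing concrete, here is a minimal NumPy sketch of the standard softmax formula, exp(z_i) divided by the sum of exp(z_j) over all logits. The function name softmax and the sample logits are illustrative choices, and subtracting the maximum logit is a common numerical-stability trick rather than anything this lesson prescribes.

```python
import numpy as np


def softmax(logits):
    """Compute the softmax of a 1-D sequence of logits."""
    z = np.asarray(logits, dtype=np.float64)
    # Shifting by the max leaves the result unchanged but avoids overflow in exp.
    exps = np.exp(z - np.max(z))
    # Dividing by the total makes the outputs sum to 1.
    return exps / exps.sum()


probs = softmax([2.0, 1.0, 0.2])
print(probs)        # three values, each strictly between 0 and 1
print(probs.sum())  # 1.0 up to floating-point rounding
```

The largest logit gets the largest share of the probability: for these inputs the result is roughly 0.65, 0.24 and 0.11.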

Example of the softmax function at work.

TensorFlow Softmax

We're using TensorFlow to build neural networks and, appropriately, there's a function for calculating softmax.

    x = tf.nn.softmax([2.0, 1.0, 0.2])

Easy as that! tf.nn.softmax() implements the softmax function for you. It takes in logits and returns softmax activations.
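To inspect the values that this call produces, here is a minimal sketch that assumes TensorFlow 2.x, where eager execution is on by default and the result exposes .numpy(). Under TensorFlow 1.x the tensor would instead have to be evaluated inside a session. The variable names logits, probs and values are illustrative.

```python
import tensorflow as tf

# Assumption: TensorFlow 2.x with eager execution enabled (the default).
logits = [2.0, 1.0, 0.2]

# tf.nn.softmax converts the list to a float tensor and normalizes
# along the last axis.
probs = tf.nn.softmax(logits)

# Pull the result out as a NumPy array to look at the numbers.
values = probs.numpy()
print(values)
print(values.sum())  # close to 1.0, within float32 rounding
```

The printed activations should be roughly 0.65, 0.24 and 0.11, with the largest logit receiving the largest probability.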

Quiz

Use the softmax function in the quiz below to return the softmax of the logits.
